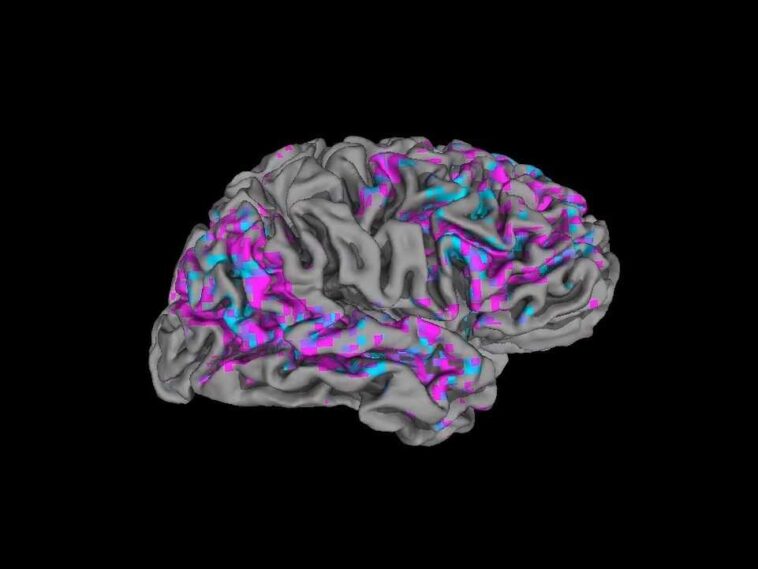

This video still shows a view of a person’s cerebral cortex. Pink areas are active above average; blue areas show below-average activity.

Jerry Tang and Alexander Huth

Scientists have found a way to decode a stream of words in the brain using MRI scans and artificial intelligence.

The system reconstructs the gist of what a person hears or imagines, rather than trying to reproduce every word, a team reports in the journal Nature Neuroscience.

“It’s about understanding the ideas behind the words, the semantics and the meaning,” says Alexander Huth, an author of the study and an assistant professor of neuroscience and computer science at the University of Texas at Austin.

However, this technology cannot read minds. It only works if a participant is actively collaborating with scientists.

Still, systems that decode speech could one day help people who are unable to speak due to brain injury or illness. They also help scientists understand how the brain processes words and thoughts.

Previous attempts to decode speech relied on sensors placed directly on the surface of the brain. The sensors pick up signals in areas involved in the articulation of words.

But the Texas team’s approach is an attempt to “unlock more free-form thought,” says Marcel Just, a psychology professor at Carnegie Mellon University who was not involved with the new research.

That could mean there are applications beyond communication, he says.

“One of the greatest scientific medical challenges is understanding mental illness, which is ultimately a dysfunction of the brain,” says Just. “I think this general approach will solve this mystery one day.”

Podcasts in the MRI

The new study came as part of efforts to understand how the brain processes language.

The researchers had three people each spend up to 16 hours in a functional MRI scanner, which detects signs of activity throughout the brain.

Participants wore headphones that streamed audio from podcasts. “Mostly they just lay there and listened to stories from The Moth Radio Hour,” says Huth.

These streams of words generated activity throughout the brain, not just in areas associated with speech and language.

“It turns out that a large part of the brain is doing something,” says Huth. “So areas that we use for navigation, areas that we use for mental arithmetic, areas that we use to process what things feel like to touch.”

After the participants listened to stories in the scanner for hours, the MRI data was sent to a computer. It learned to associate certain patterns of brain activity with specific streams of words.

Next, the team had the participants listen to new stories in the scanner. Then the computer tried to reconstruct these stories from each participant’s brain activity.

In constructing intelligible sentences, the system got a lot of help from artificial intelligence: an early version of the famous natural language processing program ChatGPT.

What emerged from the system was a paraphrased version of what a participant had heard.

So if a participant heard the sentence: “I didn’t even have a driver’s license,” the decoded version could read: “She hadn’t even learned to drive a car,” says Huth. In many cases, he says, the decoded version contained errors.

In another experiment, the system was able to paraphrase words that a person had only imagined saying.

In a third experiment, participants watched videos that told a story without using words.

“We didn’t tell the subjects to try to describe what was happening,” says Huth. “And yet we have this kind of linguistic description of what’s going on in the video.”

A non-invasive window on language

The MRI approach is currently slower and less accurate than an experimental communication system for people who are paralyzed, which is being developed by a team led by Dr. Edward Chang at the University of California, San Francisco.

“People get a plate of electrical sensors that’s implanted right on the surface of the brain,” says David Moses, a researcher in Chang’s lab. “It records brain activity very close to the source.”

The sensors detect activity in areas of the brain that normally issue commands for speech. At least one person was able to use the system to accurately generate 15 words per minute using only their mind.

But with an MRI-based system, “nobody needs surgery,” says Moses.

Neither approach can be used to read a person’s mind without their cooperation. In the Texas study, people were able to defeat the system simply by telling themselves a different story.

However, future versions could raise ethical questions.

“It’s very exciting, but also a bit scary,” says Huth. “What if you could read the word someone is thinking in their head right now? It’s potentially a harmful thing.”

Moses agrees.

“The point here is that the user has a new way of communicating, a new tool that is completely under their control,” he says. “That’s the goal and we have to make sure it stays that way.”
