Long before Elon Musk and Apple co-founder Steve Wozniak signed a letter warning that artificial intelligence posed “profound risks” to humanity, British theoretical physicist Stephen Hawking had sounded the alarm about the rapidly evolving technology.

“The development of full artificial intelligence could spell the end of the human race,” Hawking said in a 2014 interview with the BBC.

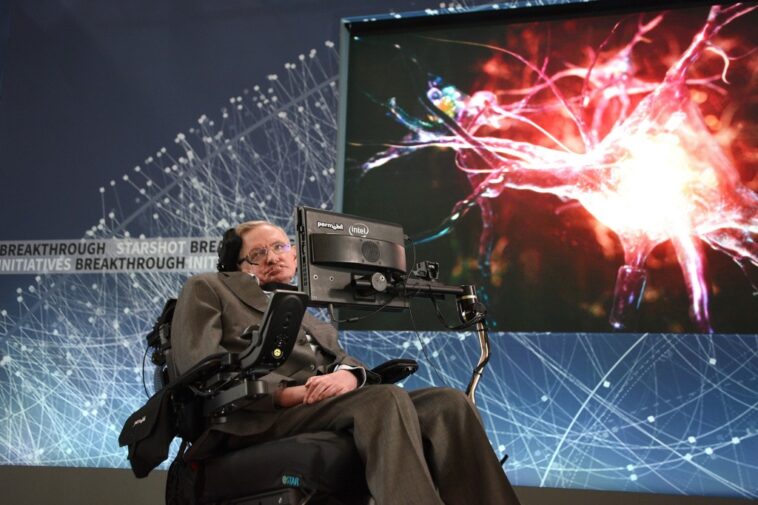

Hawking, who lived with amyotrophic lateral sclerosis (ALS) for more than 55 years, died in 2018 at the age of 76. Despite his criticism of AI, he relied on a very basic form of the technology to communicate, because his illness weakened his muscles and left him using a wheelchair.

After losing the ability to speak in 1985, Hawking relied on several means of communication, including an Intel-powered speech-generating device that let him use facial movements to select words or letters, which were then synthesized into speech.

Hawking’s comment to the BBC in 2014 that AI “could spell the end of the human race” came in response to a question about an overhaul of the voice technology he relied on to communicate. He told the BBC that very simple forms of AI had already proven powerful, but that creating systems that rival or surpass human intelligence could be disastrous for the human race.

“It would take off on its own and reshape itself at an ever-increasing rate,” he said.

“Humans constrained by slow biological evolution would not be able to keep up and would be displaced,” Hawking added.

Hawking’s final book, Brief Answers to the Big Questions, was published months after his death and answered questions he was frequently asked. The book laid out Hawking’s argument against the existence of God, his view that humans will likely one day live in space, and his fears about genetic engineering and global warming.

Artificial intelligence also ranked high on his list of “big questions,” and he argued that computers “are likely to overtake humans in intelligence within 100 years.”

“We may face an intelligence explosion that ultimately results in machines whose intelligence exceeds ours by more than ours exceeds that of snails,” he wrote.

He argued that computers need to be trained to align with human goals, adding that not taking the risks associated with AI seriously may be “our worst mistake ever.”

“It’s tempting to dismiss the notion of highly intelligent machines as mere science fiction, but that would be a mistake – and possibly our worst mistake ever.”

Hawking’s comments echo concerns raised earlier this year by tech mogul Elon Musk and Apple co-founder Steve Wozniak in a letter published in March. The two tech leaders, along with thousands of other experts, signed the letter calling for a pause of at least six months on building AI systems more powerful than OpenAI’s GPT-4 chatbot.

“AI systems with human-competitive intelligence can pose profound risks to society and humanity, as shown by extensive research and acknowledged by top AI labs,” reads the letter, published by the nonprofit Future of Life Institute.

OpenAI’s ChatGPT had the fastest-growing user base on record in January, reaching 100 million monthly active users as people around the world rushed to try the chatbot, which simulates human-like conversation in response to prompts. The lab released the latest iteration of the platform, GPT-4, in March.

Despite calls for AI labs to pause work on systems that would outperform GPT-4, the system’s release was a game changer that reverberated across the tech industry, spurring companies to build competing AI systems of their own.

Google is working on revamping its search engine and even building a new AI-powered one; Microsoft launched the “new Bing” search engine, billed as users’ “AI-powered copilot for the web”; and Musk said he would introduce a rival AI system, which he described as “maximum truth-seeking.”

The year before his death, Hawking warned that the world “must learn how to prepare for and avoid the potential risks” associated with AI, arguing that the systems “could be the worst event in the history of our civilization.” However, he noted that the future remains unknown and that, with proper training, AI could prove beneficial to humanity.

“Success in creating effective AI could be the biggest event in the history of our civilization. Or the worst. We just don’t know. So we cannot know if we will be infinitely helped by AI, or ignored by it and side-lined, or conceivably destroyed by it,” Hawking said during a speech at the Web Summit technology conference in Portugal in 2017.
